The “gradient” model is built from a sample in which the weights of the individuals depend on the performance of the previous model; the idea of iterative corrections remains highly relevant. Ricco Rakotomalala, Tutoriels Tanagra – http://tutoriels-data-mining.blogspot.fr/
Gradient Boosting = …
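The reweighting idea above (each individual's weight depends on how well the previous model handled it) is easiest to see in AdaBoost, swapped in here purely as an illustration; gradient boosting itself instead fits each new model to the negative gradient of the loss. The helper name `adaboost_reweight` and the toy labels are hypothetical, and labels are assumed to be in {-1, +1}.

```python
import math


def adaboost_reweight(weights, y_true, y_pred):
    """Hypothetical helper: one AdaBoost-style reweighting step.

    Labels and predictions are assumed to be in {-1, +1}.
    Returns the normalised new weights and the model's vote weight alpha.
    """
    # Weighted error of the previous model.
    err = sum(w for w, yt, yp in zip(weights, y_true, y_pred) if yt != yp) / sum(weights)
    err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified individuals (yt * yp = -1) are up-weighted, correct ones down-weighted.
    new = [w * math.exp(-alpha * yt * yp) for w, yt, yp in zip(weights, y_true, y_pred)]
    total = sum(new)
    return [w / total for w in new], alpha


weights = [0.25, 0.25, 0.25, 0.25]
y_true = [1, 1, -1, -1]
y_pred = [1, -1, -1, -1]  # the second individual is misclassified
new_weights, alpha = adaboost_reweight(weights, y_true, y_pred)
print(new_weights, alpha)
```

Here the misclassified individual's share of the total weight rises from 0.25 to 0.5, so the next model concentrates on it.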

File size: 1 MB

What is Gradient Boosting and How is it different from

Gradient Boosting is used for regression as well as classification tasks. In this section, we are going to see how it is used in regression with the help of an example. Following is a sample from a random dataset where we have to predict the weight of an individual, given the height, favourite colour, and gender of a person.
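A minimal from-scratch sketch of that regression setting, assuming squared-error loss and one-split trees (stumps) as the weak learners. The six rows below are hypothetical toy values, with colour and gender encoded as integers; `fit_stump` and `gradient_boost` are illustrative names, not a library API.

```python
def fit_stump(X, r):
    """Fit a one-split tree to residuals r by minimising squared error."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [ri for row, ri in zip(X, r) if row[j] <= t]
            right = [ri for row, ri in zip(X, r) if row[j] > t]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((v - lv) ** 2 for v in left) + sum((v - rv) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    if best is None:  # no valid split: fall back to a constant leaf
        mean = sum(r) / len(r)
        return lambda row: mean
    _, j, t, lv, rv = best
    return lambda row: lv if row[j] <= t else rv


def gradient_boost(X, y, n_rounds=50, learning_rate=0.1):
    """Squared-error gradient boosting with stumps (illustrative sketch)."""
    f0 = sum(y) / len(y)  # the mean minimises squared error for a constant model
    stumps, pred = [], [f0] * len(y)
    for _ in range(n_rounds):
        # For L = (y - F)^2 / 2 the negative gradient is the plain residual y - F.
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        h = fit_stump(X, residuals)
        stumps.append(h)
        pred = [pi + learning_rate * h(row) for pi, row in zip(pred, X)]
    return lambda row: f0 + learning_rate * sum(h(row) for h in stumps)


# Hypothetical toy data: (height in m, favourite colour code, gender code) -> weight in kg.
X = [(1.6, 0, 1), (1.6, 1, 0), (1.5, 0, 0), (1.8, 2, 1), (1.5, 1, 1), (1.4, 0, 0)]
y = [88, 76, 56, 73, 77, 57]

model = gradient_boost(X, y)
baseline = sum(y) / len(y)
mse_base = sum((yi - baseline) ** 2 for yi in y) / len(y)
mse_gb = sum((yi - model(row)) ** 2 for row, yi in zip(X, y)) / len(y)
print(mse_base, mse_gb)
```

Splitting on the integer-coded colour treats it as ordered, which is a simplification; a real pipeline would one-hot encode it.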

Estimated Reading Time: 10 mins

XGBoost for Regression – Machine Learning Mastery

Gradient Boosting regression: Load the data. First we need to load the data. Data preprocessing: next, we will split our dataset to use 90% for training and leave the rest for testing. Fit regression model: now we will initialize the gradient boosting regressor and fit it with our …
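A minimal sketch of those three steps with scikit-learn. The dataset (scikit-learn's bundled diabetes data), the random seeds, and the hyperparameter values are assumptions chosen for illustration; the page's own data and settings are not recoverable from this excerpt.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Load the data (assumption: the bundled diabetes dataset, no download needed).
X, y = load_diabetes(return_X_y=True)

# Data preprocessing: 90% for training, the remaining 10% for testing.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, random_state=13)

# Fit the regression model; these hyperparameters are illustrative, not tuned.
params = {
    "n_estimators": 200,
    "max_depth": 3,
    "min_samples_split": 5,
    "learning_rate": 0.05,
    "random_state": 0,
}
reg = GradientBoostingRegressor(**params)
reg.fit(X_train, y_train)

mse = mean_squared_error(y_test, reg.predict(X_test))
print(f"Test MSE: {mse:.1f}, RMSE: {np.sqrt(mse):.1f}")
```

The loss is left at its default so the sketch does not depend on the loss name, which changed between scikit-learn versions.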

Gradient Boosting Regression – GitHub Pages

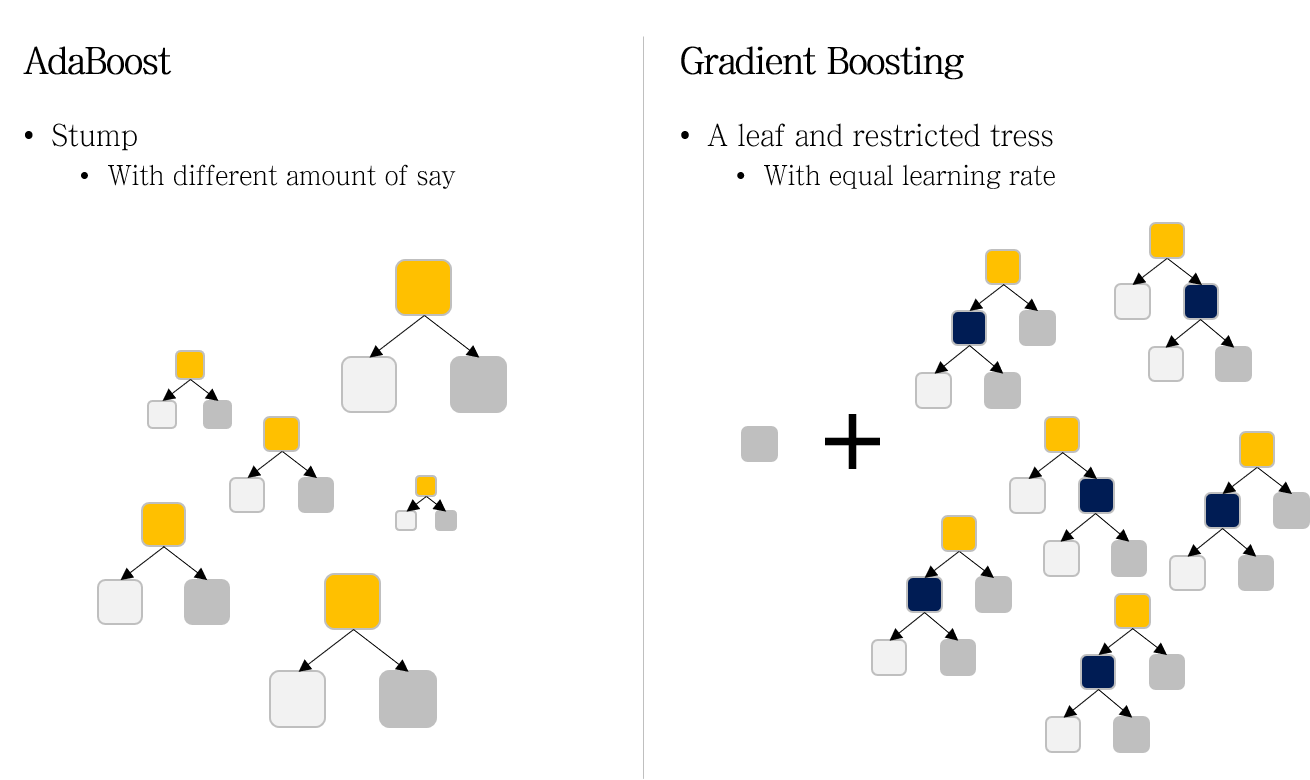

Gradient Boosting vs. AdaBoost

Gradient boosting – Wikipedia

Gradient Boosting – What You Need to Know

What Is Boosting?

sklearn.ensemble.GradientBoostingRegressor — scikit …

Gradient boosting refers to a class of ensemble machine learning algorithms that can be used for classification or regression predictive modeling problems. Ensembles are constructed from decision tree models. Trees are added one at a time to the ensemble and …
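To watch trees being added one at a time, scikit-learn's `GradientBoostingRegressor.staged_predict` yields the ensemble's predictions after each stage. The synthetic data from `make_regression` and the hyperparameters are assumptions for illustration only.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error

# Synthetic regression data (assumption: any numeric dataset would do).
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

model = GradientBoostingRegressor(n_estimators=100, learning_rate=0.1, max_depth=3, random_state=0)
model.fit(X, y)

# staged_predict yields predictions of the ensemble after 1, 2, ..., n_estimators trees.
train_mse = [mean_squared_error(y, y_pred) for y_pred in model.staged_predict(X)]
print(f"after 1 tree: {train_mse[0]:.1f}, after {len(train_mse)} trees: {train_mse[-1]:.1f}")
```

Evaluating the same staged predictions on held-out data is a common way to choose how many trees to keep.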

Gradient Boosting regression — scikit-learn 0.24.2

Gradient Boosting for regression. GB builds an additive model in a forward stage-wise fashion; it allows for the optimization of arbitrary differentiable loss functions. In each stage a regression tree is fit on the negative gradient of the given loss function.
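Written out, the forward stage-wise procedure looks roughly as follows. The notation ($F_m$ for the model after stage $m$, $r_{im}$ for the pseudo-residuals, $h_m$ for the stage-$m$ tree, $\nu$ for the shrinkage or learning rate, $M$ for the number of stages) is introduced here for illustration. Friedman's formulation also includes a line-search step that re-fits the leaf values, so the shrinkage-only update below is a simplification.

```latex
\begin{aligned}
F_0(x) &= \arg\min_{\gamma} \sum_{i=1}^{n} L(y_i, \gamma) \\
r_{im} &= -\left[ \frac{\partial L\bigl(y_i, F(x_i)\bigr)}{\partial F(x_i)} \right]_{F = F_{m-1}}, \qquad i = 1, \dots, n \\
h_m &= \text{regression tree fitted to } \{(x_i, r_{im})\}_{i=1}^{n} \\
F_m(x) &= F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M
\end{aligned}
```

For the squared error $L = \tfrac{1}{2}(y - F)^2$ the pseudo-residual is the ordinary residual $r_{im} = y_i - F_{m-1}(x_i)$; for the absolute error $L = |y - F|$ it becomes $r_{im} = \operatorname{sign}\bigl(y_i - F_{m-1}(x_i)\bigr)$.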

Ensemble technique for predictive analytics

PDF file